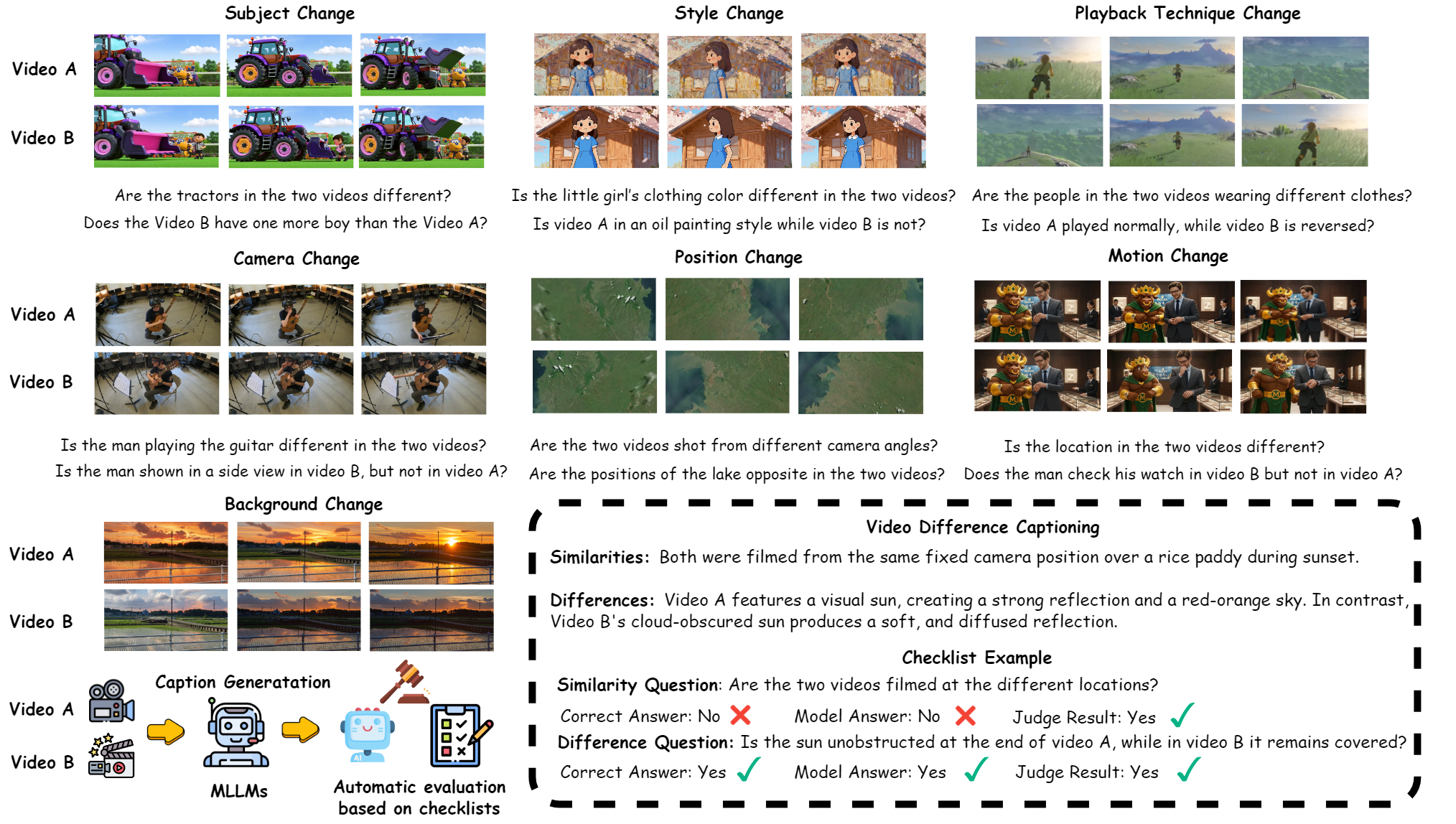

We evaluate video comparison using Accuracy over a set of Dual-Checklist questions.

Avg: Average Score

Diff: Difference Accuracy

Sim: Similarity Accuracy

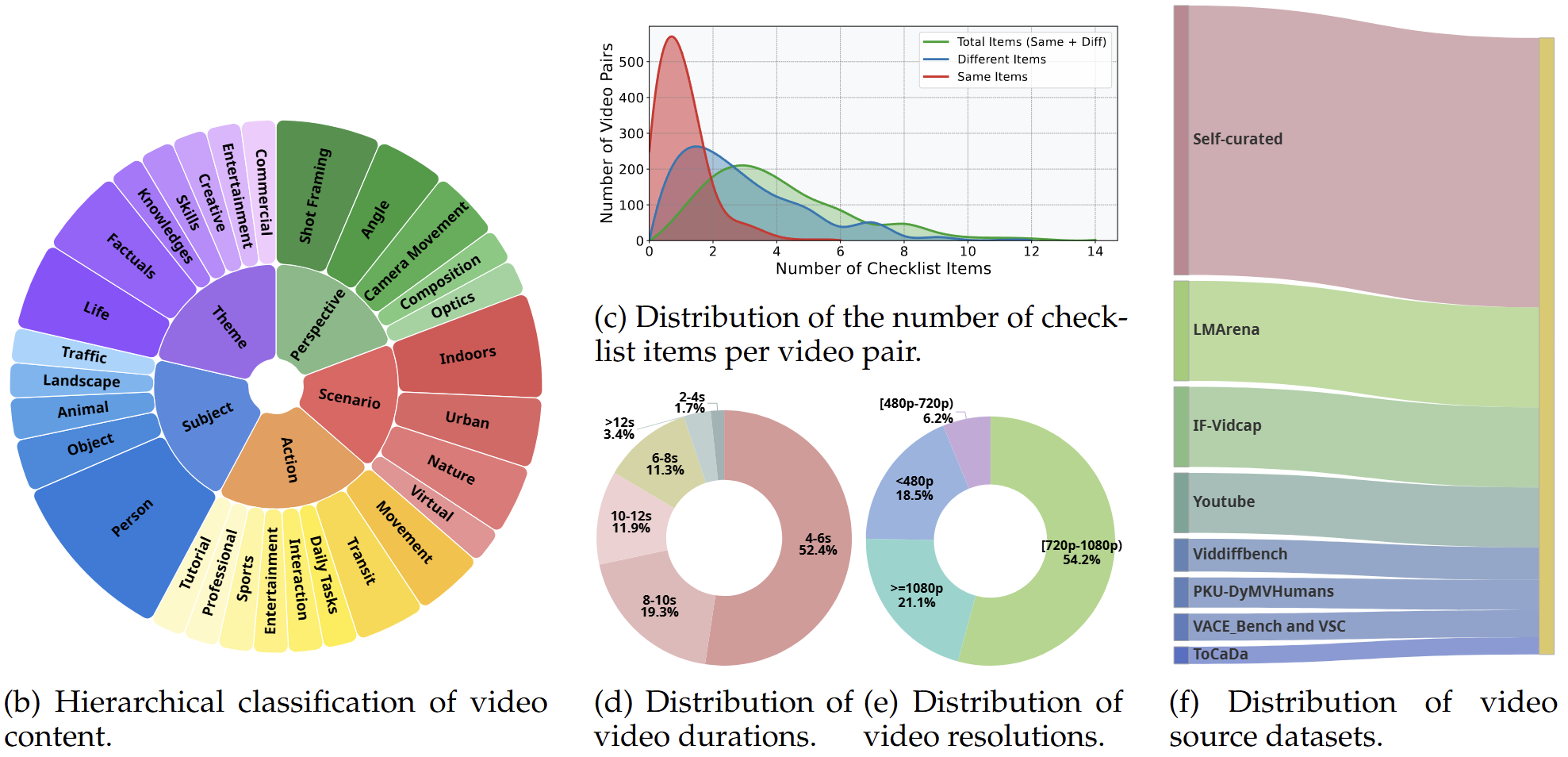

Fine-grained Categories:

Subj (Subject), Motion, Pos (Position), Backgr (Background), Cam (Camera Work), Style, Tech (Playback Technique).

💡 indicates specific "thinking" or reasoning modes activated in models.

| # | Model | Param | Overall Metrics (%) | Category Performance (%) | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Avg | Diff. | Sim. | Subj | Motion | Pos. | Backgr. | Cam. | Style | Tech. | |||

| - | Gemini-2.5-Pro💡 | - | 66.72 | 63.73 | 75.33 | 67.71 | 62.78 | 68.24 | 70.65 | 59.97 | 75.79 | 74.32 |

| - | GPT-5💡 | - | 62.94 | 57.32 | 79.17 | 61.52 | 57.78 | 65.31 | 69.15 | 57.39 | 77.60 | 54.66 |

| - | Gemini-2.5-Flash💡 | - | 58.87 | 52.11 | 78.37 | 59.63 | 51.29 | 57.23 | 63.98 | 52.82 | 81.58 | 55.41 |

| - | Gemini-2.0-Flash💡 | - | 53.71 | 50.26 | 63.66 | 58.90 | 48.71 | 57.86 | 57.11 | 47.30 | 55.79 | 18.92 |

| - | GPT-4o💡 | - | 49.95 | 39.14 | 81.12 | 46.79 | 43.53 | 51.89 | 53.73 | 49.18 | 77.89 | 27.03 |

| - | Qwen3-VL-32B | 32B | 61.38 | 58.54 | 71.50 | 64.60 | 51.77 | 62.00 | 68.62 | 52.66 | 74.86 | 47.83 |

| - | InternVL-3.5💡 | 38B | 52.44 | 46.25 | 70.30 | 52.66 | 43.04 | 53.77 | 59.80 | 47.80 | 72.63 | 20.27 |

| - | Qwen2.5-VL-Inst | 72B | 49.71 | 42.56 | 70.30 | 48.07 | 44.82 | 48.11 | 55.92 | 46.42 | 68.95 | 22.97 |

| - | Mimo-VL-SFT | 7B | 52.59 | 46.51 | 70.17 | 54.39 | 46.55 | 51.25 | 57.31 | 48.37 | 67.71 | 25.33 |

| - | GLM-4.1V💡 | 9B | 40.95 | 33.99 | 61.08 | 42.60 | 34.35 | 38.13 | 47.26 | 33.83 | 64.58 | 14.67 |

| - | Llama-3.2 | 11B | 19.43 | 5.23 | 61.01 | 14.48 | 20.31 | 17.84 | 13.44 | 29.56 | 40.00 | 11.70 |